Hi all,

After a long beta test (thank you everyone) I’ve finally launched Zotto and I’m really proud of it.

You can read some of the buzz about it in this previous post.

If you already know about it and want to buy it, here’s the purchase link.

What is Zotto?

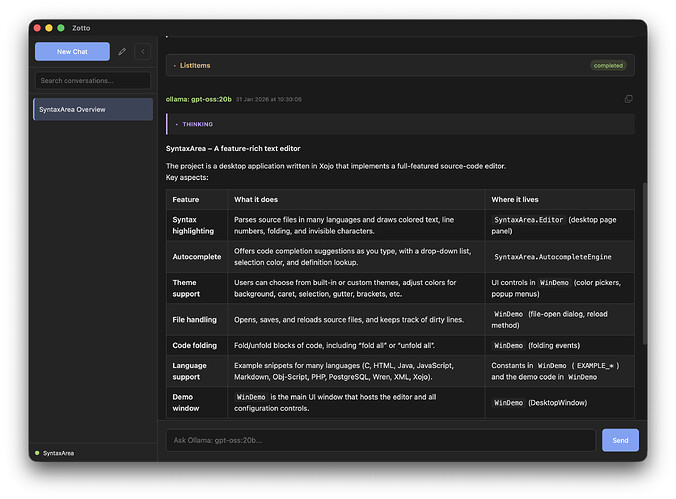

Zotto is a cross-platform desktop app (built entirely in Xojo) that connects directly to the Xojo IDE. It’s designed to be a true “pair programmer” that actually sees and understands the project you are working on.

How is this different from Xojo’s Jade?

It’s all about context.

Zotto doesn’t just guess about your project; it uses a custom-built AST parser (XojoKit) to read your open project structure in real time. It knows your class hierarchy, your method signatures, and your properties.

Zotto detects the running IDE and its open projects, and can connect to one project at a time.

It can search through your project to find code snippets and suggest refactoring.

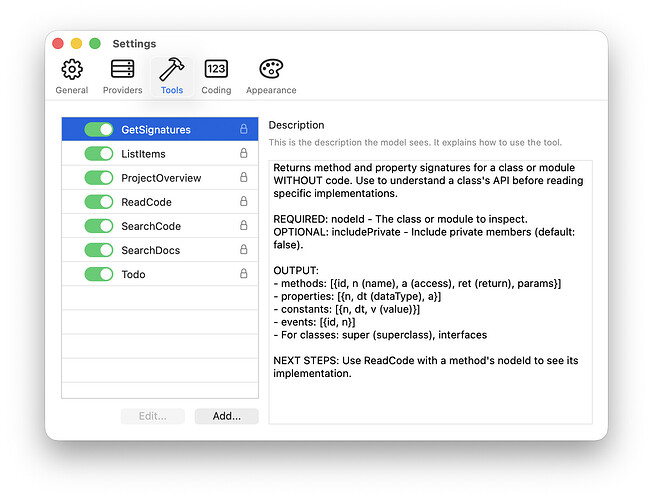

Zotto comes with several built-in tools for interacting with an open Xojo project and even includes a custom tool for offline searching of Xojo documentation to reduce hallucinations.

Because Zotto is context-aware, you can ask, “How do I implement the interface defined in MyCore.Utils?” and it knows exactly what that interface looks like without you pasting it.

I know many developers here are protective of their source code, so Zotto is intentionally a read-only assistant. This is partly due to limitations in Xojo’s IDE scripting (principally speed and the inability to refresh a project from disk without closing the project). Zotto suggests copy-and-pasteable changes that you implement in the IDE yourself. Whilst this is a little slower than writing directly to disk, you remain in full control of what gets implemented.

Models

Zotto is model agnostic: you plug in your own provider, so you are not limited to Anthropic as you are with Jade. Zotto currently supports the following providers:

- LM Studio & Ollama (running either locally on the same computer or reachable over your network)

- OpenAI

- Anthropic

- Google Gemini

- Any OpenAI-Responses API compatible provider (e.g. Grok, OpenRouter)
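To illustrate what "model agnostic" can mean in practice, here is a minimal Python sketch of a provider registry. It is not Zotto's actual code (Zotto is written in Xojo). The names `Provider`, `EchoProvider` and `ProviderRegistry` are hypothetical, and the stand-in backend simply echoes the prompt instead of calling a real API.

```python
from typing import Protocol


class Provider(Protocol):
    """Hypothetical interface that every backend would satisfy."""

    name: str

    def complete(self, prompt: str) -> str: ...


class EchoProvider:
    """Stand-in backend for illustration; a real one would call an LLM API."""

    def __init__(self, name: str) -> None:
        self.name = name

    def complete(self, prompt: str) -> str:
        return f"[{self.name}] {prompt}"


class ProviderRegistry:
    """Maps provider names to backends so calling code never depends on one vendor."""

    def __init__(self) -> None:
        self._providers: dict[str, Provider] = {}

    def register(self, provider: Provider) -> None:
        self._providers[provider.name] = provider

    def complete(self, provider_name: str, prompt: str) -> str:
        if provider_name not in self._providers:
            raise KeyError(f"unknown provider: {provider_name}")
        return self._providers[provider_name].complete(prompt)


registry = ProviderRegistry()
for backend in ("ollama", "openai", "anthropic"):
    registry.register(EchoProvider(backend))

print(registry.complete("ollama", "Explain this method"))
```

The point of the design is that switching from a local model to a hosted one only changes the name passed to the registry, not the code that builds the prompt.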

Major features

- Cross-platform: Runs natively on macOS and Windows.

- Built 100% in Xojo.

- Can be used 100% offline if desired.

- Supports all major LLM providers, both local (on-device) and remote (proprietary).

- Read-only access to your projects - no destructive activity.

- Supports custom MCP servers - supply your own tools if you like.

- Comprehensive built-in tools to read and understand a connected project.

- Full theme engine. Supports light and dark mode and allows customising many aspects of the UI.

- Syntax highlighting of Xojo code (again, colours are customisable).

- Renders the Markdown output by models in real time.

- Automatically compacts conversations to keep the flow going.

- Lightweight: Uses about 60 MB of RAM on Mac - leaves plenty of space for on-device models.
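For readers wondering what automatic conversation compaction might look like, here is a rough Python sketch of one common approach: once the history exceeds a token budget, older messages are folded into a single summary and the most recent ones are kept verbatim. This is an assumption about the general technique, not a description of how Zotto implements it. The `estimate_tokens` and `compact` functions, the four-characters-per-token estimate and the truncation-based summary are all hypothetical.

```python
def estimate_tokens(text: str) -> int:
    """Crude estimate: roughly four characters per token (an assumption)."""
    return max(1, len(text) // 4)


def compact(messages: list[dict], budget: int, keep_recent: int = 2) -> list[dict]:
    """Fold older messages into one summary message when the budget is exceeded."""
    if keep_recent < 1:
        raise ValueError("keep_recent must be at least 1")

    total = sum(estimate_tokens(m["content"]) for m in messages)
    if total <= budget or len(messages) <= keep_recent:
        return messages  # nothing to do

    older, recent = messages[:-keep_recent], messages[-keep_recent:]
    # Placeholder summary: a real assistant would ask the model to summarise.
    summary = "Summary of earlier conversation: " + " | ".join(
        m["content"][:40] for m in older
    )
    return [{"role": "system", "content": summary}] + recent


history = [
    {"role": "user", "content": "How do I parse JSON in Xojo? " * 5},
    {"role": "assistant", "content": "You can use JSONItem for that. " * 5},
    {"role": "user", "content": "And how do I handle errors?"},
    {"role": "assistant", "content": "Wrap the call in a Try...Catch block."},
]

compacted = compact(history, budget=40)
print(len(history), "->", len(compacted), "messages")
```

Keeping the latest turns untouched means the model still sees the exact wording of the current question, while the summary preserves the gist of what came before.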

Purchasing

Zotto can be used free of charge with some limitations (one conversation, no custom tools and a few other restrictions).

All features can be unlocked for £69 plus local taxes.

This is not a subscription. You can use any version released during your update period forever, even after the update period ends.