Recently I spent some time trying to determine how much load a Xojo Web app could sustain and figured I’d share my journey here with everyone. Let’s start with the setup…

DigitalOcean (DO) Droplet

Debian 12 x86

Premium AMD w/ NVMe SSD

1 GB RAM / 1 AMD vCPU

25 GB NVMe SSD

This isn't the very bottom of the barrel (the cheapest Droplet was $4/month as of this writing); I moved up to the Premium tier ($7/month) for better specs and throughput.

Web apps deployed via Lifeboat behind an nginx reverse proxy, with no load balancing

Testing Location

I’m just doing this from home

MacBook Pro M1 Pro

1 Gbps fiber, although my Mac is on Wi-Fi (802.11ac with a Tx rate of 780 Mbps)

I'm about 100 miles from the DO data center

Be aware that this is not how you would normally do in-depth load testing, where you'd have many (hundreds or thousands of) geographically dispersed clients adding load to your web app.

Testing Product Selection

There are lots of tools out there, from JMeter to Locust, but ultimately I wanted something I didn't need to invest a lot of time in, even though I knew this would limit my results. I started with hey before I found its spiritual successor, oha. The big plus here is that there's a precompiled binary, so you don't have to build anything with make or install a bunch of Java, Python, etc. dependencies. Note for anyone who's not a terminal person: both hey and oha download as a binary that won't run on the Mac until you set the executable bit (e.g. "chmod +x ./oha").

Testing Methodology

I ended up using the Eddie's Electronics example web app that comes with Xojo. You'll find it under Samples Apps/Eddie's Electronics.

I'm not sure how much caching, if any, DO or nginx does out of the gate, so I wanted to run the test "warm," as this is the most likely real-world scenario. That means I did one test run that I discarded, then took the results from a second run.

Of course I was curious, so I ran this test across Xojo 2019r3.2 (Web 1.0), 2023r4, and 2024r1.62274. Be aware that only the latter two (both Web 2.0) are directly comparable, but it's still interesting to see the contrast with Web 1.0.
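If you end up repeating this warm-up routine a lot, it's easy to script. Here's a minimal Python sketch of the discard-the-first-run approach; `run_test` is a hypothetical stand-in for one complete test run (for example, a wrapper that shells out to oha and parses its output), not part of any tool mentioned here.

```python
from typing import Callable, TypeVar

T = TypeVar("T")


def warm_then_measure(run_test: Callable[[], T], warmup_runs: int = 1) -> T:
    """Run the test `warmup_runs` times, discard those results, and return
    the result of one more run (the "warm" measurement).

    `run_test` is a hypothetical zero-argument callable representing one
    full load-test run.
    """
    for _ in range(warmup_runs):
        run_test()  # result thrown away; this run only primes any caches along the request path
    return run_test()
```

With the default of one warm-up run, the function calls `run_test` twice and returns only the second result, which mirrors what I did by hand.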

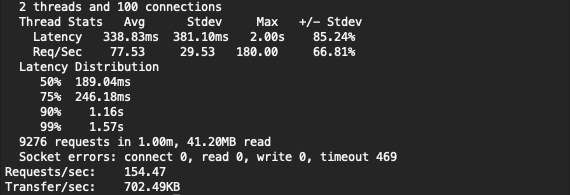

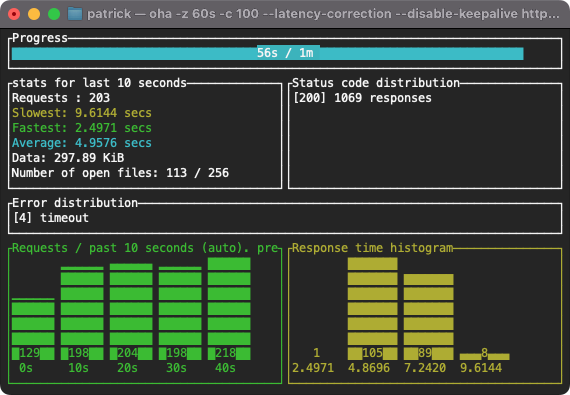

Per the oha recommendations, I ran the following command to test things out…

```shell
./Downloads/oha -z 60s -c 100 --latency-correction --disable-keepalive https://myserver.com
```

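Note that they also recommend adding -q, which I skipped because I assumed it had to do with query strings, and I don't believe Eddie's Electronics uses those. (In oha, -q actually sets a rate limit in queries per second, and --latency-correction is ignored unless -q is set, so it had no effect in these runs.)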
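For anyone curious what that style of test roughly does under the hood, here's a simplified Python sketch of the same idea using only the standard library: a fixed number of workers each send GET requests back to back for a fixed duration, opening a fresh connection for every request (similar in spirit to --disable-keepalive). The function name and parameters are my own, and it is much cruder than oha: no rate limiting, no latency correction, and requests still in flight at the deadline are allowed to finish rather than being counted as aborted.

```python
import http.client
import threading
import time
from urllib.parse import urlsplit


def closed_loop_load(url, duration_s=60.0, concurrency=100, timeout_s=20.0):
    """Hit `url` with `concurrency` workers for `duration_s` seconds.

    Each worker sends one GET at a time, back to back, and opens a new
    connection per request. Returns (latencies_in_seconds, errors).
    """
    parts = urlsplit(url)
    conn_cls = (http.client.HTTPSConnection if parts.scheme == "https"
                else http.client.HTTPConnection)
    path = parts.path or "/"
    if parts.query:
        path += "?" + parts.query
    deadline = time.monotonic() + duration_s
    latencies, errors = [], []
    lock = threading.Lock()  # protects the shared result lists

    def worker():
        while time.monotonic() < deadline:
            start = time.monotonic()
            # New connection every time, so TCP (and TLS) setup is part of each latency.
            conn = conn_cls(parts.hostname, parts.port, timeout=timeout_s)
            try:
                conn.request("GET", path, headers={"Connection": "close"})
                resp = conn.getresponse()
                resp.read()  # include the body download in the measured time
                elapsed = time.monotonic() - start
                with lock:
                    if resp.status < 400:
                        latencies.append(elapsed)
                    else:
                        errors.append(f"HTTP {resp.status}")
            except (OSError, http.client.HTTPException) as exc:
                with lock:
                    errors.append(repr(exc))
            finally:
                conn.close()

    threads = [threading.Thread(target=worker) for _ in range(concurrency)]
    for t in threads:
        t.start()
    for t in threads:
        t.join()
    return latencies, errors
```

Usage would look like `latencies, errors = closed_loop_load("https://myserver.com", duration_s=60, concurrency=100)`. Python threads won't generate load as efficiently as oha, so treat this as an illustration of the test shape rather than a replacement.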

Results

Here are the terminal results from each of the runs…

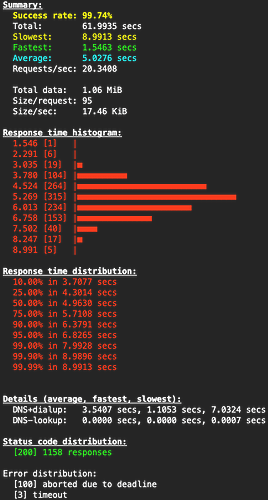

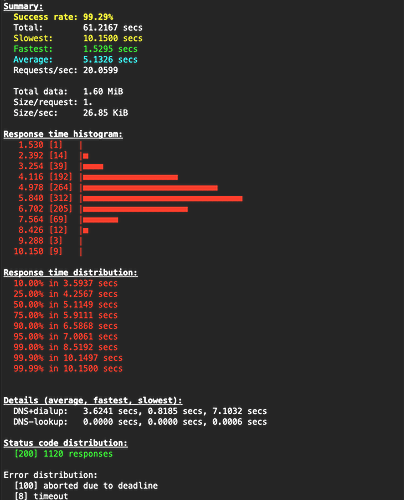

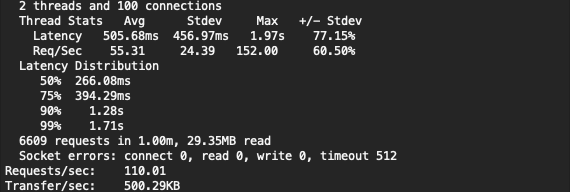

Xojo 2019r3.2 (Web 1.0)

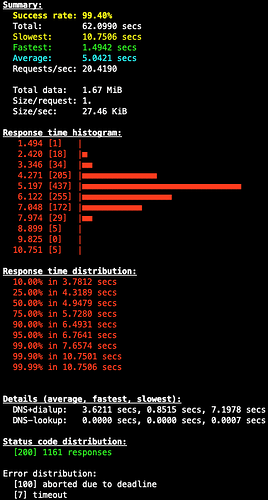

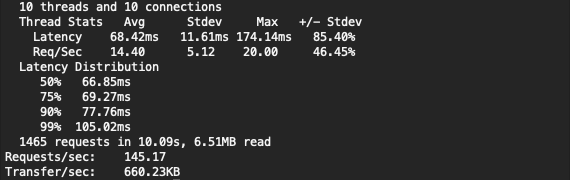

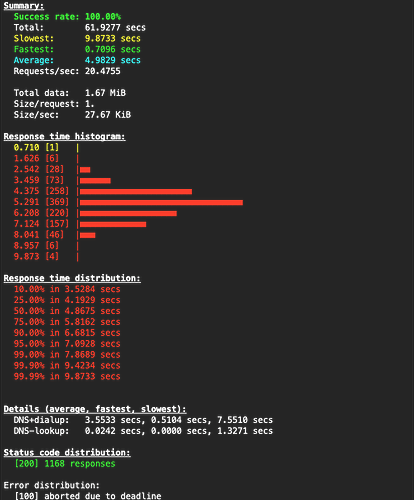

Xojo 2023r4 (Web 2.0)

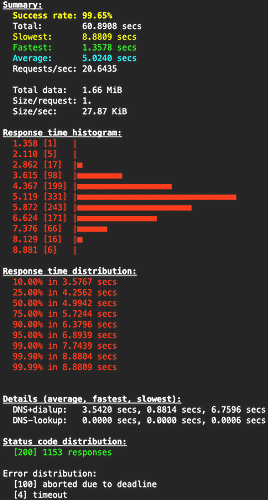

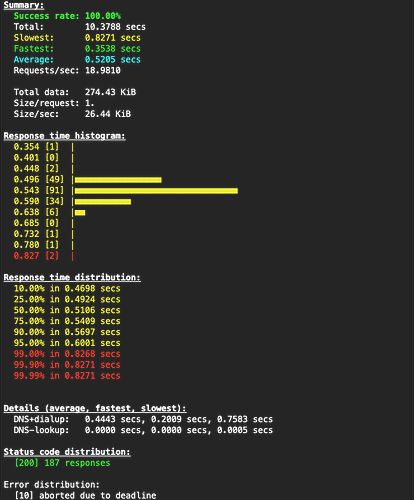

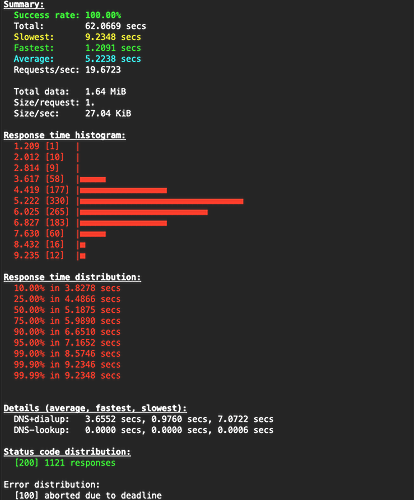

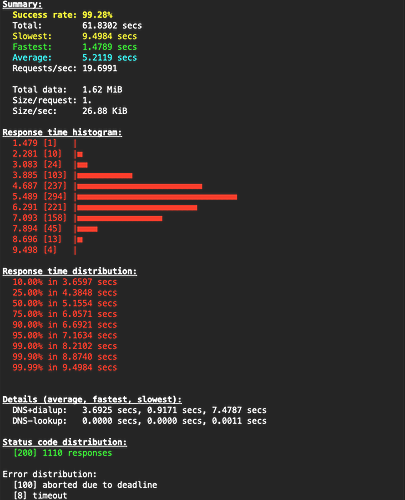

Xojo 2024r1.62274 (Web 2.0)

Discussion

The first deficiency to call out is that the test only hits the home page and doesn't navigate through the interface at all. As a result, the findings say little beyond how quickly and reliably the home page loads.

The first thing that stands out is how different Web 1.0 is from Web 2.0. Not only is the size/request drastically lower under 2.0, but the size/sec is higher, which should mean much better efficiency and throughput than 1.0. The caveat is that the total data size is greater under 2.0, but I think this is because the project itself differs from the 1.0 version.

Next, requests/sec look about the same across all three tests, although 2023r4 had slightly more timeouts. Note that "aborted due to deadline" isn't an error per se; it's the number of requests still in flight that were cut off when the 60-second test time ran out.

It's also interesting that across all tests, 75% of requests completed within the low 5-second range, and the slowest and fastest times were about the same for all three.
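It may help to spell out how those size figures relate. Assuming oha's size/request and size/sec are both derived from the same total byte count (I haven't verified its exact bookkeeping, e.g. whether failed requests are included), size/sec is simply size/request multiplied by the successful-request rate. A quick Python sketch with made-up numbers:

```python
def summarize_transfer(total_bytes, successful_requests, duration_s):
    """Return (bytes_per_request, bytes_per_second) from run totals.

    Identity: bytes_per_second == bytes_per_request * (successful_requests / duration_s)
    """
    if successful_requests <= 0 or duration_s <= 0:
        raise ValueError("need at least one successful request and a positive duration")
    bytes_per_request = total_bytes / successful_requests
    bytes_per_second = total_bytes / duration_s
    return bytes_per_request, bytes_per_second


# Illustrative numbers only, not taken from the runs above.
per_req, per_sec = summarize_transfer(12_000_000, 6000, 60)
print(per_req, per_sec)  # 2000.0 200000.0
```

So if requests/sec hold steady, a smaller size/request would by itself lower size/sec; that's worth keeping in mind when comparing the screenshots.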
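For readers less used to percentile output: the 75% entry in oha's latency distribution means three quarters of the measured requests finished in that time or less. Here's a small Python sketch of one common way to compute such a figure (the nearest-rank method); oha's own calculation may differ slightly in how it picks or interpolates values.

```python
import math


def percentile_nearest_rank(samples, pct):
    """Return the pct-th percentile (0 < pct <= 100) using the nearest-rank method."""
    if not samples:
        raise ValueError("no samples")
    if not 0 < pct <= 100:
        raise ValueError("pct must be in (0, 100]")
    ordered = sorted(samples)
    rank = math.ceil(pct * len(ordered) / 100)  # 1-based rank into the sorted list
    return ordered[rank - 1]


print(percentile_nearest_rank(list(range(1, 101)), 75))  # 75
```

For example, with latencies of 1 through 100 seconds, the 75th percentile is 75 seconds: 75 of the 100 samples are at or below it.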

Next Steps

On my side, I was mostly curious about how much load Web 2.0 could take. In this very rudimentary test, it appears that 50 concurrent users hitting the Eddie's Electronics home page at the same time is very doable. My next step might be to crank up the number of requests and concurrent connections against my own web app to gauge at what point I should move up to a higher DO Droplet tier, add load balancing, etc. At the very least, I now have a baseline to work against.
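If you want to automate that kind of search, one simple approach is a stepped ramp: re-run the test at increasing concurrency levels and stop at the first level that breaks an error-rate or latency budget. The Python sketch below assumes a hypothetical `run_at(concurrency)` function that performs one (warm) run and returns the latencies and an error count; the default thresholds are placeholders, not recommendations.

```python
import math


def find_breaking_point(run_at, levels, max_error_rate=0.01, max_p75_s=5.0):
    """Step through increasing concurrency levels and return the first level
    whose results exceed either threshold, or None if every level passes.

    `run_at(concurrency)` is a hypothetical callable that performs one test
    run and returns (latencies_in_seconds, error_count).
    """
    for concurrency in levels:
        latencies, error_count = run_at(concurrency)
        total = len(latencies) + error_count
        if total == 0:
            return concurrency  # nothing completed at all: treat as broken
        error_rate = error_count / total
        ordered = sorted(latencies)
        # Nearest-rank 75th percentile; infinite if every request failed.
        p75 = ordered[math.ceil(0.75 * len(ordered)) - 1] if ordered else math.inf
        if error_rate > max_error_rate or p75 > max_p75_s:
            return concurrency
    return None
```

Stepping by doubling (say 25, 50, 100, 200) keeps the number of runs small; once a breaking level shows up, you can re-test between the last passing level and that one to narrow it down.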