Sometimes an easy task turns out not to be that easy at all, especially when you confuse yourself with formulas. I hope someone can help me untangle my synapse jam:

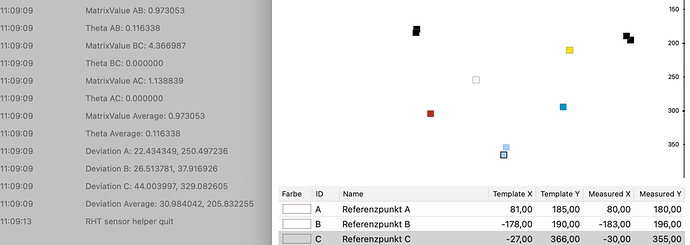

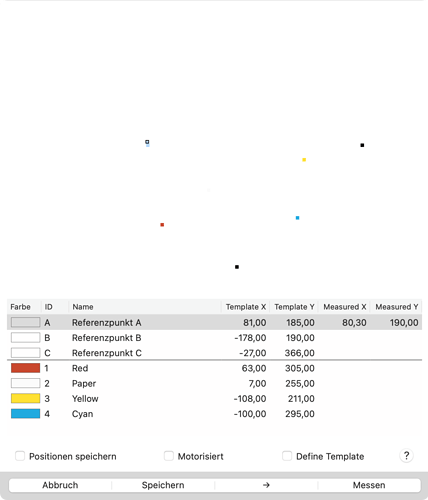

I have a list of coordinates representing patches on a sheet of paper. The first three points are randomly chosen reference points; the others are color patches to be scanned. TemplateX/Y are the positions on the original template, and the measured values are those taken from the current print.

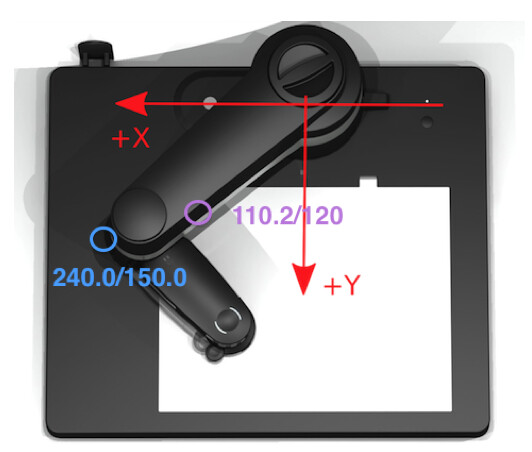

The coordinate system used by the instrument is a bit unusual: it is rotated and mirrored vertically, with x increasing to the left and x = 0 somewhere in the middle. I guess that should not matter, though. I mention it only to explain why the selected reference point A (blue with a black border, upper left) appears slightly higher and further left than its measured position (light blue without a border, directly below the first spot in the picture).
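The guess holds, assuming both the template positions and the measured positions are read in the same instrument frame. Let $F$ be the instrument's rotated-and-mirrored orientation (any $2\times2$ reflection, $\det F = -1$). A mis-mount by rotation $R(\theta)$, scale $s$ and shift $\mathbf{t}$ in paper coordinates $\mathbf{p}$ then shows up in instrument coordinates $\mathbf{q} = F\mathbf{p}$ as:

$$
F\bigl(s\,R(\theta)\,\mathbf{p} + \mathbf{t}\bigr)
= s\,\bigl(F R(\theta) F^{-1}\bigr)\,F\mathbf{p} + F\mathbf{t}
= s\,R(-\theta)\,\mathbf{q} + F\mathbf{t}.
$$

So the relation is still a rotation, a scaling and a shift; only the apparent sense of rotation flips. An offset origin (x = 0 in the middle) would change only the shift term.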

Of course, an operator will not be able to mount the template in exactly the same position every time, so the idea is to determine the transformation from the reference points and apply the result to the patches, so that any necessary translation, rotation and perhaps slight scaling is applied to them.
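Written out, and assuming the mis-mount is a rotation by an angle $\theta$, a uniform scale $s$ and a shift $(t_x, t_y)$, each template position $(x, y)$ lands at its measured position $(x', y')$ via the first form below. Relaxing it to a general affine map (second form) gives six unknowns $a, \dots, f$, which is exactly what three reference points (six coordinates) can pin down:

$$
\begin{pmatrix} x' \\ y' \end{pmatrix}
= s\begin{pmatrix} \cos\theta & -\sin\theta \\ \sin\theta & \cos\theta \end{pmatrix}
\begin{pmatrix} x \\ y \end{pmatrix}
+ \begin{pmatrix} t_x \\ t_y \end{pmatrix},
\qquad
\begin{pmatrix} x' \\ y' \end{pmatrix}
= \begin{pmatrix} a & b \\ d & e \end{pmatrix}
\begin{pmatrix} x \\ y \end{pmatrix}
+ \begin{pmatrix} c \\ f \end{pmatrix}.
$$

If the fitted map is close to a pure rotation plus scaling, the mount parameters can be read back as $s \approx \sqrt{a^2 + d^2}$, $\theta \approx \operatorname{atan2}(d, a)$ and $(t_x, t_y) = (c, f)$. The affine form also absorbs a slight shear or unequal stretch, should the print have any.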

The problem is that my maths only goes as far as working with right-angled triangles, and the reference points do not form one (or at least do not have to). Any hint about a good place to start, or even more?
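No right angles are needed. Below is a minimal sketch in Python with NumPy, assuming exactly three reference pairs and the affine model $x' = ax + by + c$, $y' = dx + ey + f$. Each reference point contributes a row $[x_i, y_i, 1]$, and solving that 3×3 system against the measured coordinates yields all six coefficients at once. The only requirement is that the three points do not lie on one straight line. The function names `fit_affine`, `apply_affine` and `describe` are hypothetical, and the demo numbers are made up rather than taken from the question's data.

```python
import numpy as np


def fit_affine(src, dst, tol=1e-9):
    """Exact 2-D affine transform mapping three src points onto three dst points.

    Returns a 2x3 matrix [[a, b, c], [d, e, f]] with
    x' = a*x + b*y + c and y' = d*x + e*y + f.
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    # One row [x_i, y_i, 1] per reference point. This matrix is invertible
    # exactly when the three points are not on one line; no right angle needed.
    P = np.column_stack([src, np.ones(len(src))])
    if abs(np.linalg.det(P)) < tol:
        raise ValueError("reference points are (nearly) collinear")
    # Solves P @ [a, b, c] = x' and P @ [d, e, f] = y' in a single call.
    return np.linalg.solve(P, dst).T


def apply_affine(M, points):
    """Map an (n, 2) array of template points through the 2x3 matrix M."""
    pts = np.asarray(points, dtype=float)
    return pts @ M[:, :2].T + M[:, 2]


def describe(M):
    """Read shift, rotation and scale back out, assuming little or no shear."""
    a, b, d, e = M[0, 0], M[0, 1], M[1, 0], M[1, 1]
    return {
        "shift": (M[0, 2], M[1, 2]),
        "angle_deg": np.degrees(np.arctan2(d, a)),
        "scale": np.hypot(a, d),
        "mirrored": a * e - b * d < 0,  # negative determinant = reflection
    }


if __name__ == "__main__":
    # Hypothetical numbers, not the values from the question:
    # a 2 degree rotation, 1 % enlargement and a shift of (3, -2).
    theta, s = np.radians(2.0), 1.01
    true_M = np.array([[s * np.cos(theta), -s * np.sin(theta), 3.0],
                       [s * np.sin(theta), s * np.cos(theta), -2.0]])
    template_refs = [(0.0, 0.0), (100.0, 10.0), (30.0, 80.0)]  # A, B, C
    measured_refs = apply_affine(true_M, template_refs)

    M = fit_affine(template_refs, measured_refs)
    template_patches = [(50.0, 50.0), (75.0, 20.0)]
    print(apply_affine(M, template_patches))  # predicted patch positions on the print
    print(describe(M))
```

With more than three reference points, calling `np.linalg.lstsq` on the same kind of system would give a least-squares fit instead of an exact one, which averages out measurement noise.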

Thanks a lot!

Here is a good site to start with, including live examples and code: