Background:-

I am new here, retired, but do about 20 hours/week of pro bono work and consulting. I have been using XOJO on a licenced iMac for a few weeks, and generally enjoying it. The development work that I do is mostly for non-profits with 5-50 users. Nearly all have Wi-Fi LANS, and often a small server. Many users have tablets, some have laptops, and all have smart phones. Most of these organizations need a small database. Many of them would find setting up and running a traditional database server, or using a Xojo Cloud Hosting Package difficult to finance (CAPEX is easy, OPEX isn’t). Their needs might be served by web applications.

I have tried a few things out, and am considering upgrading my licence. What follows is lengthy…

So far:-

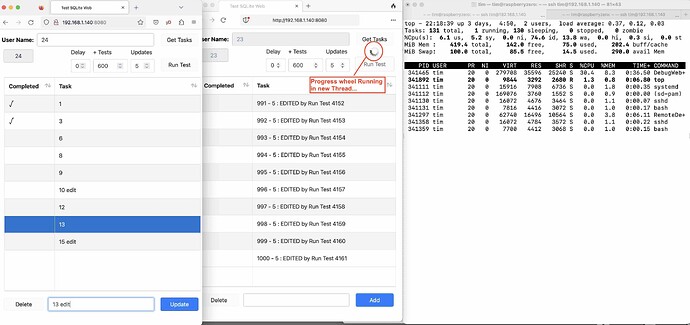

It looks as though SQLite might do the job for web apps that I might develop. To test this I modified Dana Brown’s web Task SQLite Example by adding a button to start long-running tests using inserts, reads, updates and deletes to stress the system (The usual CRUD stuff). Changed the database by adding Log Tables to track significant changes (and make SQLite work harder) and put much of “the code” into Triggers. I tried to reduce the traffic by sending the userID instead of userName where possible, but increased it by pulling back all relevant records to the TaskList to ensure that (multi) users saw “a single truth”. The Add button was extended to allow each Task to be edited. The number of rows added in a chosen time can be modified by the form. Each added row was edited 6 times, a trigger incremented an “edited” integer field (for later concurrency control function). After 10-50 rows each additional row for user was then deleted. Triggers added an “OLD.record” row to a log table for each edit and delete, the last 3 rows from the log were retreieved and their values inserted into strings. I assumed up to 10 concurrent users - Each adding, retrieving, editing and deleting. Trying this out on the iMac with 3 browser clients was much too fast (640 rows added/sec which gave an additional 3,800 inserted records, 1,900 retrieves and 640 deletes for each row). The run used about 70% of one CPU (3.6 GHz Quad-Core Intel Core i3 with 16 GB RAM). To slow the system down, anything that touched the database was put inside a transaction with small delay timers (100-250 milliseconds) after each commit. Transactions were a bad idea, as I got a lot of time-outs - Removing them and using WAL fixed that. The delay timer pushed the single CPU usage up to ~98%. The changes got me down to near to the 2 rows added per second I was looking for. This plateaued with 2+ connections (3-9 concurrent PCs, iPads, and iPhones with Safari, Chrome, and Firefox browsers).

Raspberry pi 4B Quad-core 64-bit 1.5 GHz, 2GB RAM, Raspbian OS Lite - Run Remotely:-

Taking out the delay timers I eventually got a plateau of ~20 total added rows/second for 2-5 concurrent clients (which gave an additional 120 inserted records, 60 retrieves and 20 deletes for each row). The “disk” was a Sandisk Extreme Pro 64Gb MicroSD. Initially a Lexar 32GB V60 card gave ~16 rows/sec - Replacing the Sandisk with a “fast” Sandisk 64 150MB/s USB Flash drive, and then a Samsung 1GB/sec 1TB SSD did not improve performance; indicating that it was limited by the CPU or network interface. Using WiFi instead of ethernet gave the same performance - Therefore probably CPU. To test this…

Raspberry pi Zero 2W, Quad-core 64-bit 1 GHz, 512MB RAM, Raspbian OS Lite - Run Remotely:-

Using the same set up with WiFi I got ~14 added rows/second, confirming that performance probably depends on CPU.

Feasibility of Raspberry pi?:-

Using a “worst case” scenario, the pi Zero was used for subsequent tests. Typically there was ~100MB memory free, with no significant swap. Results were confirmed at a total of ~14 added rows/sec (which gave an additional 80 inserted records, 40 retrieves and 14 deletes for each row). System was stable at this loading for at least 8 hrs 20 mins (overnight, 2 concurrent test clients). Maximum core temp 46C with passively cooled Aluminium case (unloaded base 37C, room temp max 27C).

Backups: Obviously, a “single user” database with multiple users may be difficult to maintain/backup in a production environment. Running the same 14 rows/sec, I successfully tried several back up scenarios (.backup ; .dump; and; and VACUUM INTO). Results for VACUUM INTO: 9.7MB sqlite file with 91,752 rows in log table backed up in a few seconds; a 566.6MB sqlite file with 5,268,886 rows backed up in 93 seconds - This was whilst running 2 test clients with a 3rd live web client, allowing me to manually add rows with a response of ~3 sec/row, delete at ~1 sec, and edit at ~2 sec (There were 6-12 rows on screen, each change retrieved all relevent records to the ListBox).

Conclusions:-

I was surprised as to how capable XOJO with a web interface is for a simple SQLite CRUD application. Apparently it has more power than the mid-range multiuser terminal and client-server relational systems that I created in the 1980-2000s.

As a back-end for small systems, is a pi be feasible? The use of WAL seems to have fixed multi-user and backup issues that I have struggled with in the past.

I won’t recommend a Raspberry pi Zero for production use, as it looks ridiculous; but it would be capable of running a small work group - The numbers indicate that a “user transaction” that takes 10 seconds to enter with background tasks could (in theory!!) be run by 100 simultaneous users. Or at 10 rows/sec that is >250,000 in a working day (I’d still use SQL Server/PostgreSQL for that!). BUT, for a few hundred or a few thousand rows each day added between 10 users - A web app with SQLite, a Raspberry pi, and an external disk is probably a practical solution…

Observations:-

The premise that a web app with adequate performance on a Wi-Fi LAN using an SQLite database seems very reasonable for similar cases.

In testing, average screen responses for CRUD operations is ~2 seconds. This is is within the old 4 sec IBM screen response guidelines. Web users often expect slow responses - It might be improved by further tuning and using the ListBox “properly”.

Production systems may require an additional simple, single, Admin web front end to control user access and database backup.

Transactions save a lot of network traffic and grief. In the early 1990s I learnt to keep as much logic as possible in the database layer - I had several bad experiences with contractors putting logic into the client presentation layer and stuffing up data. It happened again when middleware became popular later. My rule of thumb became “Work out the project parameters, specify the hardware at more than twice the design specs; the database will need three times what you thought - Do not try to make up any shortfall later when you have a lightly specced system by pushing processing/logic out to client machines”…

Learning how to do these experiments, producing the test system, and doing it, took about 40 hours.

Outstanding Questions:-

The web product back end seems OK. Would a Console back-end be an improvement, considering the additional work required?

Is it faster to use a compiled app rather than the Debugger on similar hardware?

Is it possible to create complex band-type reports with Web clients? MS Access was good at this - I’m finding it difficult to get my head around how I can generate appropriate headers, footers, tables and page breaks…

My Background:-

- 1971 Learnt FORTRAN as part of a Chemistry course.

- 1970s Used Dartmouth type BASICs and proprietary languages on Laboratory Instrumentation.

Learnt some C and shell coding on obscure BSDs and DEC PDPs and Data General minis. - 1980s Built PC-LAN and Novell networks; DEC RdB, Informix, and Oracle (V4-6) DBs and later Sybase databases, mostly with PC clients.

Learnt (and liked) R:Base, and used it to build Lab and Business management systems.

Often used QuickBASIC to talk to instruments and produce data formatted for big systems. - 1990s Most of customer base was businesses with a turnover of $1-10 Million. Used VB 3-4 particularly to talk to databases.

Discovered (that for our customers) it was often much quicker and cheaper to develop in MS Access. For larger systems used an Access front end talking to MS SQL Server - Meaning I pretty much stopped VB development. - 2004 Semi-Retirement with some pro bono work (Often have to assess very large spreadsheets - Use SQLite to tidy up data).

- 2009 Moved mostly to a MacBook Pro.

- 2011 Retirement - Pro bono work only, occasionally run Windows XP/10 in Parallels on an Intel iMac.

- Now - Use the iMac, iPads, iPhone, a Raspberry Pi 4 (and an old Pi V1 that has just been replaced by the pi Zero).

Likely to upgrade iMac to Silicon later.

Will probably stop running Parallels. I may be getting too old to wrestle with Microsoft stuff…

If you have made it down here, to the end - Thank you! I would be grateful for any observations, advice or criticisms…

The Hardware:-