What if we used Claude to create a plugin? I haven’t developed plugins myself, so I wonder whether it would work. I don’t have the tools to test it, but if someone is willing to dive in…

A very simple prompt generated everything below:

Can you build a xojo plugin for the llama.cpp and create a high level interface?

The plugin code, LlamaCppPlugin.cpp:

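#include "XojoPlugin.h"

#include "llama.h"

#include "common.h" // llama_batch_add / llama_batch_clear come from llama.cpp's common helpers, not llama.h (renamed common_batch_* in newer trees)

#include <cstring> // strlen

#include <string>

#include <vector>

#include <memory>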

// Plugin class definition

class LlamaCppPlugin {

private:

llama_model* model;

llama_context* ctx;

llama_model_params model_params;

llama_context_params ctx_params;

std::vector<llama_token> tokens;

bool initialized;

public:

LlamaCppPlugin() : model(nullptr), ctx(nullptr), initialized(false) {

model_params = llama_model_default_params();

ctx_params = llama_context_default_params();

}

~LlamaCppPlugin() {

cleanup();

}

void cleanup() {

if (ctx) {

llama_free(ctx);

ctx = nullptr;

}

if (model) {

llama_free_model(model);

model = nullptr;

}

initialized = false;

}

bool loadModel(const char* model_path, int n_ctx = 2048, int n_gpu_layers = 0) {

cleanup();

llama_backend_init();

model_params.n_gpu_layers = n_gpu_layers;

model = llama_load_model_from_file(model_path, model_params);

if (!model) {

return false;

}

ctx_params.n_ctx = n_ctx;

// No seed is set here: with the llama_sampler API used in generate(), seeding is done by llama_sampler_init_dist().

ctx = llama_new_context_with_model(model, ctx_params);

if (!ctx) {

llama_free_model(model);

model = nullptr;

return false;

}

initialized = true;

return true;

}

const char* generate(const char* prompt, int max_tokens = 256,

float temperature = 0.7f, float top_p = 0.9f,

int top_k = 40) {

if (!initialized) {

return "Error: Model not loaded";

}

static std::string result;

result.clear();

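if (!prompt) {

return "Error: Prompt is null";

}

const int prompt_len = (int)strlen(prompt);

tokens.assign(prompt_len + 1024, 0);

int n_tokens = llama_tokenize(model, prompt, prompt_len,

tokens.data(), (int)tokens.size(), true, false);

// A negative count means the buffer was too small; the prompt must also fit in the context.

if (n_tokens < 0 || n_tokens >= (int)llama_n_ctx(ctx)) {

return "Error: Prompt could not be tokenized or exceeds the context size";

}

tokens.resize(n_tokens);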

// The chain is created from llama_sampler_chain_params; top-k, top-p, and temperature are added as individual samplers below.

llama_sampler* smpl = llama_sampler_chain_init(llama_sampler_chain_default_params());

llama_sampler_chain_add(smpl, llama_sampler_init_top_k(top_k));

llama_sampler_chain_add(smpl, llama_sampler_init_top_p(top_p, 1));

llama_sampler_chain_add(smpl, llama_sampler_init_temp(temperature));

llama_sampler_chain_add(smpl, llama_sampler_init_dist(LLAMA_DEFAULT_SEED));

llama_batch batch = llama_batch_init(ctx_params.n_ctx, 0, 1);

for (int i = 0; i < n_tokens; i++) {

llama_batch_add(batch, tokens[i], i, {0}, false);

}

batch.logits[batch.n_tokens - 1] = true;

if (llama_decode(ctx, batch) != 0) {

llama_batch_free(batch);

llama_sampler_free(smpl);

return "Error: Failed to decode";

}

int n_cur = batch.n_tokens;

for (int i = 0; i < max_tokens; i++) {

llama_token new_token = llama_sampler_sample(smpl, ctx, -1);

if (llama_token_is_eog(model, new_token)) {

break;

}

char buf[256];

int n = llama_token_to_piece(model, new_token, buf, sizeof(buf), 0, false);

if (n < 0) {

break;

}

result.append(buf, n);

llama_batch_clear(batch);

llama_batch_add(batch, new_token, n_cur, {0}, true);

n_cur++;

if (llama_decode(ctx, batch) != 0) {

break;

}

}

llama_batch_free(batch);

llama_sampler_free(smpl);

return result.c_str();

}

int getContextSize() const {

return initialized ? llama_n_ctx(ctx) : 0;

}

bool isLoaded() const {

return initialized;

}

};

static std::unique_ptr<LlamaCppPlugin> g_plugin;

extern "C" {

REALpluginGlobal(void, LlamaCpp_Initialize)(void) {

g_plugin = std::make_unique<LlamaCppPlugin>();

}

REALpluginGlobal(void, LlamaCpp_Cleanup)(void) {

g_plugin.reset();

}

REALpluginGlobal(REALboolean, LlamaCpp_LoadModel)(REALstring model_path,

int32_t n_ctx,

int32_t n_gpu_layers) {

if (!g_plugin) {

g_plugin = std::make_unique<LlamaCppPlugin>();

}

const char* path = REALGetCString(model_path);

bool success = g_plugin->loadModel(path, n_ctx, n_gpu_layers);

REALUnlockString(model_path);

return success ? 1 : 0;

}

REALpluginGlobal(REALstring, LlamaCpp_Generate)(REALstring prompt,

int32_t max_tokens,

float temperature,

float top_p,

int32_t top_k) {

if (!g_plugin) {

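static const char kNotInitialized[] = "Error: Plugin not initialized";

// REALBuildStringWithEncoding takes the byte count as its second argument.

return REALBuildStringWithEncoding(kNotInitialized, (int)(sizeof(kNotInitialized) - 1),

kREALTextEncodingUTF8);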

}

const char* prompt_str = REALGetCString(prompt);

const char* result = g_plugin->generate(prompt_str, max_tokens,

temperature, top_p, top_k);

REALUnlockString(prompt);

return REALBuildStringWithEncoding(result, (int)strlen(result), kREALTextEncodingUTF8);

}

REALpluginGlobal(int32_t, LlamaCpp_GetContextSize)(void) {

return g_plugin ? g_plugin->getContextSize() : 0;

}

REALpluginGlobal(REALboolean, LlamaCpp_IsModelLoaded)(void) {

return (g_plugin && g_plugin->isLoaded()) ? 1 : 0;

}

REALpluginGlobal(void, LlamaCpp_UnloadModel)(void) {

if (g_plugin) {

g_plugin->cleanup();

}

}

}

static REALmethodDefinition methods[] = {

{(REALproc)LlamaCpp_Initialize, REALnoImplementation,

"LlamaCpp.Initialize()", REALconsoleSafe},

{(REALproc)LlamaCpp_LoadModel, REALnoImplementation,

"LlamaCpp.LoadModel(modelPath As String, contextSize As Integer = 2048, gpuLayers As Integer = 0) As Boolean",

REALconsoleSafe},

{(REALproc)LlamaCpp_Generate, REALnoImplementation,

"LlamaCpp.Generate(prompt As String, maxTokens As Integer = 256, temperature As Single = 0.7, topP As Single = 0.9, topK As Integer = 40) As String",

REALconsoleSafe},

{(REALproc)LlamaCpp_GetContextSize, REALnoImplementation,

"LlamaCpp.GetContextSize() As Integer", REALconsoleSafe},

{(REALproc)LlamaCpp_IsModelLoaded, REALnoImplementation,

"LlamaCpp.IsModelLoaded() As Boolean", REALconsoleSafe},

{(REALproc)LlamaCpp_UnloadModel, REALnoImplementation,

"LlamaCpp.UnloadModel()", REALconsoleSafe},

{(REALproc)LlamaCpp_Cleanup, REALnoImplementation,

"LlamaCpp.Cleanup()", REALconsoleSafe}

};

REALpluginDefinition pluginDefinition = {

kCurrentREALControlVersion, "LlamaCppPlugin", methods,

sizeof(methods) / sizeof(REALmethodDefinition),

0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0

};

REALplugin REALGetPluginInfo(void) {

return &pluginDefinition;

}

and a Xojo class to test the plugin:

Public Class LlamaModel

Private mModelPath As String

Private mIsLoaded As Boolean

Public ContextSize As Integer = 2048

Public GPULayers As Integer = 0

Public Temperature As Single = 0.7

Public TopP As Single = 0.9

Public TopK As Integer = 40

Public MaxTokens As Integer = 256

Sub Constructor(modelPath As String)

mModelPath = modelPath

mIsLoaded = False

LlamaCpp.Initialize()

End Sub

Sub Destructor()

If mIsLoaded Then

UnloadModel()

End If

LlamaCpp.Cleanup()

End Sub

Function LoadModel() As Boolean

If mModelPath.Trim = "" Then

Return False

End If

mIsLoaded = LlamaCpp.LoadModel(mModelPath, ContextSize, GPULayers)

Return mIsLoaded

End Function

Sub UnloadModel()

LlamaCpp.UnloadModel()

mIsLoaded = False

End Sub

Function Generate(prompt As String) As String

If Not mIsLoaded Then

Return "Error: Model not loaded. Call LoadModel() first."

End If

Return LlamaCpp.Generate(prompt, MaxTokens, Temperature, TopP, TopK)

End Function

Function GenerateWithParams(prompt As String, maxTokens As Integer, _

temperature As Single, topP As Single, _

topK As Integer) As String

If Not mIsLoaded Then

Return "Error: Model not loaded. Call LoadModel() first."

End If

Return LlamaCpp.Generate(prompt, maxTokens, temperature, topP, topK)

End Function

Function Chat(userMessage As String, systemPrompt As String = "") As String

Dim fullPrompt As String

If systemPrompt <> "" Then

fullPrompt = "System: " + systemPrompt + Chr(10) + Chr(10) + _

"User: " + userMessage + Chr(10) + Chr(10) + _

"Assistant: "

Else

fullPrompt = "User: " + userMessage + Chr(10) + Chr(10) + "Assistant: "

End If

Return Generate(fullPrompt)

End Function

Function Ask(question As String, context As String = "") As String

Dim prompt As String

If context <> "" Then

prompt = "Context: " + context + Chr(10) + Chr(10) + _

"Question: " + question + Chr(10) + Chr(10) + _

"Answer: "

Else

prompt = "Question: " + question + Chr(10) + Chr(10) + "Answer: "

End If

Return Generate(prompt)

End Function

Function GetContextSize() As Integer

Return LlamaCpp.GetContextSize()

End Function

Function IsLoaded() As Boolean

Return LlamaCpp.IsModelLoaded()

End Function

Function GetModelPath() As String

Return mModelPath

End Function

End Class

' USAGE EXAMPLE:

Sub Example()

Dim model As New LlamaModel("/path/to/model.gguf")

model.GPULayers = 32

If model.LoadModel() Then

Dim response As String = model.Chat("Hello!", "You are helpful.")

MsgBox(response)

End If

End Sub

and a CMakeLists.txt:

cmake_minimum_required(VERSION 3.15)

project(LlamaCppXojoPlugin)

set(CMAKE_CXX_STANDARD 17)

set(CMAKE_CXX_STANDARD_REQUIRED ON)

if(APPLE)

set(CMAKE_SHARED_LINKER_FLAGS "${CMAKE_SHARED_LINKER_FLAGS} -bundle -flat_namespace -undefined suppress")

elseif(WIN32)

# Windows settings

else()

# Linux settings

endif()

if(EXISTS "${CMAKE_CURRENT_SOURCE_DIR}/llama.cpp")

add_subdirectory(llama.cpp)

else()

find_library(LLAMA_LIB llama REQUIRED)

endif()

set(XOJO_SDK_PATH "${CMAKE_CURRENT_SOURCE_DIR}/XojoPluginSDK" CACHE PATH "Path to Xojo Plugin SDK")

include_directories(

${XOJO_SDK_PATH}/Headers

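${CMAKE_CURRENT_SOURCE_DIR}/llama.cpp

${CMAKE_CURRENT_SOURCE_DIR}/llama.cpp/include # llama.h lives here in recent llama.cpp trees

${CMAKE_CURRENT_SOURCE_DIR}/llama.cpp/common # common.h (batch helpers)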

)

add_library(LlamaCppPlugin SHARED LlamaCppPlugin.cpp)

if(TARGET llama)

target_link_libraries(LlamaCppPlugin PRIVATE llama)

else()

target_link_libraries(LlamaCppPlugin PRIVATE ${LLAMA_LIB})

endif()

if(APPLE)

set_target_properties(LlamaCppPlugin PROPERTIES

BUNDLE TRUE

BUNDLE_EXTENSION "xojo_plugin"

)

elseif(WIN32)

set_target_properties(LlamaCppPlugin PROPERTIES

PREFIX ""

SUFFIX ".xojo_plugin"

)

else()

set_target_properties(LlamaCppPlugin PROPERTIES

PREFIX ""

SUFFIX ".xojo_plugin"

)

endif()

and the build commands:

# macOS/Linux

mkdir build && cd build

cmake .. -DXOJO_SDK_PATH=../XojoPluginSDK

cmake --build . --config Release

# Windows

mkdir build && cd build

cmake .. -G "Visual Studio 17 2022" -DXOJO_SDK_PATH="..\XojoPluginSDK"

cmake --build . --config Release

and complete documentation:

Xojo LlamaCpp Plugin

A high-level Xojo plugin for running LLaMA models using llama.cpp. This plugin allows you to integrate powerful AI language models directly into your Xojo applications.

Features

- Easy Integration - Simple high-level API for Xojo
- GPU Acceleration - Support for Metal, CUDA, and other GPU backends
- Multiple Modes - Generate, Chat, and Q&A interfaces
- Customizable - Adjust temperature, top-p, top-k, and more
- Self-Contained - No external dependencies once built

Quick Start

' Load and use a model in 3 lines

Dim model As New LlamaModel("path/to/model.gguf")

If model.LoadModel() Then

Dim response As String = model.Generate("Hello, how are you?")

End If

Installation

1. Prerequisites

- Xojo (any recent version)
- CMake 3.15 or later
- C++ Compiler (Xcode on Mac, Visual Studio on Windows, GCC on Linux)
- Xojo Plugin SDK - Download here

2. Build llama.cpp

git clone https://github.com/ggerganov/llama.cpp

cd llama.cpp

mkdir build && cd build

cmake ..

cmake --build . --config Release

cd ../..

3. Build the Plugin

macOS:

mkdir build && cd build

cmake .. -DXOJO_SDK_PATH=../XojoPluginSDK

cmake --build . --config Release

Windows:

mkdir build && cd build

cmake .. -G "Visual Studio 17 2022" -DXOJO_SDK_PATH="..\XojoPluginSDK"

cmake --build . --config Release

Linux:

mkdir build && cd build

cmake .. -DXOJO_SDK_PATH=../XojoPluginSDK

cmake --build . --config Release

4. Install Plugin

Copy the generated .xojo_plugin file to:

- macOS: ~/Library/Application Support/Xojo/Plugins/
- Windows: C:\Users\YourName\AppData\Roaming\Xojo\Plugins\
- Linux: ~/.local/share/Xojo/Plugins/

Restart Xojo.

Usage

Basic Example

Sub TestModel()

Dim model As New LlamaModel("/path/to/model.gguf")

' Configure (optional)

model.ContextSize = 4096

model.GPULayers = 32

model.Temperature = 0.8

' Load model

If model.LoadModel() Then

' Generate text

Dim result As String = model.Generate("Once upon a time")

MsgBox(result)

Else

MsgBox("Failed to load model")

End If

End Sub

Chat Interface

Sub ChatExample()

Dim model As New LlamaModel("/path/to/model.gguf")

If model.LoadModel() Then

Dim response As String = model.Chat( _

"What is the capital of France?", _

"You are a helpful assistant." _

)

MsgBox(response)

End If

End Sub

Q&A with Context

Sub QandAExample()

Dim model As New LlamaModel("/path/to/model.gguf")

If model.LoadModel() Then

Dim context As String = "Paris is the capital of France. It has 2.1 million residents."

Dim answer As String = model.Ask("How many people live there?", context)

MsgBox(answer)

End If

End Sub

Custom Parameters

Sub CustomExample()

Dim model As New LlamaModel("/path/to/model.gguf")

If model.LoadModel() Then

Dim result As String = model.GenerateWithParams( _

"Explain quantum physics", _

500, ' maxTokens

0.5, ' temperature (lower = more focused)

0.95, ' topP

40 ' topK

)

MsgBox(result)

End If

End Sub

API Reference

LlamaModel Class

Properties

- ContextSize As Integer - Context window size (default: 2048)
- GPULayers As Integer - Number of layers to offload to GPU (default: 0)
- Temperature As Single - Sampling temperature (default: 0.7)
- TopP As Single - Nucleus sampling threshold (default: 0.9)
- TopK As Integer - Top-K sampling (default: 40)
- MaxTokens As Integer - Maximum tokens to generate (default: 256)

Methods

Constructor(modelPath As String)

- Initialize the model with a path to a GGUF file

LoadModel() As Boolean

UnloadModel()

- Unload model and free memory

Generate(prompt As String) As String

GenerateWithParams(prompt As String, maxTokens As Integer, temperature As Single, topP As Single, topK As Integer) As String

- Generate with custom parameters

Chat(userMessage As String, systemPrompt As String = "") As String

Ask(question As String, context As String = "") As String

GetContextSize() As Integer

- Get current context window size

IsLoaded() As Boolean

GetModelPath() As String

Downloading Models

Download GGUF models from Hugging Face:

Recommended Models:

- Llama-2-7B-Chat - General purpose, 4GB
- Mistral-7B-Instruct - High quality, 4GB
- Phi-2 - Fast, compact, 1.5GB
- CodeLlama - Programming tasks, 4GB

Example Download:

# Using Hugging Face CLI

pip install huggingface-hub

huggingface-cli download TheBloke/Llama-2-7B-Chat-GGUF llama-2-7b-chat.Q4_K_M.gguf

GPU Acceleration

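Enable GPU support during build (recent llama.cpp trees use GGML_-prefixed options; the older LLAMA_METAL, LLAMA_CUDA, and LLAMA_HIPBLAS names are deprecated):

Metal (macOS):

cmake .. -DGGML_METAL=ON

CUDA (NVIDIA):

cmake .. -DGGML_CUDA=ON

ROCm (AMD):

cmake .. -DGGML_HIP=ON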

Then set GPULayers property:

model.GPULayers = 32 ' Offload 32 layers to GPU

Parameter Guide

Temperature

- 0.1-0.3 - Very focused, deterministic
- 0.7 - Balanced (default)
- 1.0-1.5 - Creative, varied

Context Size

- 512 - Short conversations
- 2048 - Default, good balance
- 4096+ - Long context, more memory

GPU Layers

- 0 - CPU only
- 16-32 - Hybrid CPU/GPU
- 99 - All layers on GPU

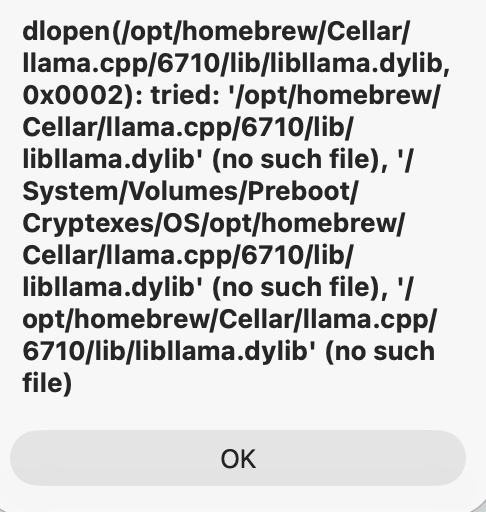

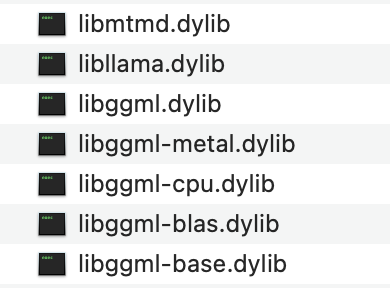

Troubleshooting

Plugin doesn’t appear in Xojo

- Restart Xojo after installation
- Check plugin is in correct folder
- On macOS: codesign --force --deep --sign - plugin.xojo_plugin

Model fails to load

- Verify GGUF format
- Check file path
- Ensure sufficient RAM

Slow generation

- Increase GPULayers
- Use smaller model
- Reduce ContextSize

Out of memory

License

This plugin is provided as-is. llama.cpp is MIT licensed.

Credits

Support

For issues with:

- Plugin building: Check CMakeLists.txt comments
- Xojo integration: Verify plugin installation
- Model loading: Check llama.cpp documentation
- Performance: Adjust parameters, enable GPU

End of Documentation…

I don’t know C and I didn’t write this code, so please don’t come back to me about it… but it’s kind of amazing what AI can deliver as a starting point…

That’s also why I would rather use @Christian_Schmitz’s plugins and buy his license: he knows what he is doing…