You must check that the training txt files are on the Desktop, or you get fatal error 2 …

I got the fatal error because the file name was “newerData.txt”, but that file doesn’t exist in the repository. And yes, the file needs to be on the Desktop.

ELAPSED 0.6122508

on console version, on battery, with m2 max (ventura)

Thanks for the discussion! I have fixed the newerdata.txt issue, I was playing with a few different machine learning datasets. It is now winedata.txt. Also, I have uploaded main.cpp so that you can test elapsed on your machines, I have a feeling the turbo on a desktop mac would roast my laptop XD. Still the first native ML in XOJO that I have found.

Building with Xojo 2023 R4 with the pragmas enabled was much faster on my machine. I have updated my YouTube description to say that C++ is really only two or three times as fast, and I have included the new GitHub link.

If you are interested in machine learning, and neural nets in particular, and how to apply them to problems, I would highly recommend the following YouTube channel.

I find his explanations of complex machine learning mathematics very easy to understand.

But to answer your question, neural nets are a fundamental component for classification problems (e.g. computer vision), generative AI (such as ChatGPT), self-driving cars, etc.

Dear All.

From my point of view, this thread is one of the most interesting I have seen. I thank Alexander Kostyak for starting it.

I see with great emotion that XOJO is not as efficient as C. However, it does come close and competes with other compilers.

As Alexander Kostyak mentions:

For most applications, it is sufficient to have these times. If you want to maximize the final process, C is undoubtedly suitable. Otherwise, you can take the advantage of XOJO.

Windows 11 x86-64 amd ryzen 7 3800x-8core cpu

Fun and Interesting, thanks!

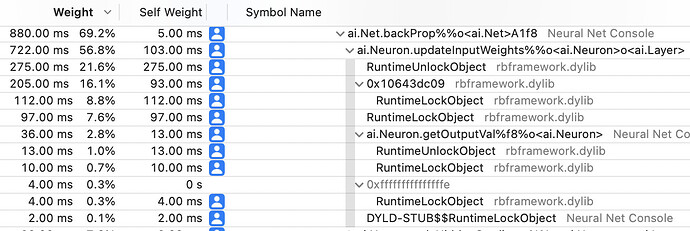

I ran the compiled console app (Intel, Core i9) under macOS Ventura using the Instruments.app Time Profiler to see where the CPU is being used:

Looks like Xojo’s RuntimeLockObject and RuntimeUnlockObject are taking a big chunk of time.

@Christian_Schmitz : do you have any ideas about how Xojo could optimize these two framework methods?

Most of the non-Xojo-framework time was spent in the ai.neuron.updateInputWeights function:

#Pragma BackgroundTasks False

#Pragma BoundsChecking False

#Pragma StackOverflowChecking False

#Pragma NilObjectChecking False

Var oldDeltaWeight As Double

Var newDeltaWeight As Double

Var nLast As Integer = prevLayer.Neurons.LastIndex

For n As Integer = 0 To nLast

oldDeltaWeight = prevLayer.Neurons(n).m_outputWeights(m_myIndex).deltaWeight

newDeltaWeight = (eta*prevLayer.Neurons(n).getOutputVal*m_gradient) + (alpha*oldDeltaWeight)

prevLayer.Neurons(n).m_outputWeights(m_myIndex).deltaWeight = newDeltaWeight

prevLayer.Neurons(n).m_outputWeights(m_myIndex).weight = prevLayer.Neurons(n).m_outputWeights(m_myIndex).weight + newDeltaWeight

Next

Notice how this function spends a lot of time re-looking up objects. I wondered if one could cache these intermediate objects and increase performance?

Here’s version 2:

function updateInputWeights(prevLayer as Layer)

// v2 optimized by Mike D

#Pragma BackgroundTasks False

#Pragma BoundsChecking False

#Pragma StackOverflowChecking False

#Pragma NilObjectChecking False

Var oldDeltaWeight As Double

Var newDeltaWeight As Double

var neurons() as ai.Neuron = prevLayer.Neurons

Var nLast As Integer = neurons.LastIndex

For n As Integer = 0 To nLast

var neuron as ai.Neuron = neurons(n)

var outputWeights as ai.connection = neuron.m_outputWeights(m_myIndex)

oldDeltaWeight = outputWeights.deltaWeight

newDeltaWeight = (eta*neuron.getOutputVal*m_gradient) + (alpha*oldDeltaWeight)

outputWeights.deltaWeight = newDeltaWeight

outputWeights.weight = outputWeights.weight + newDeltaWeight

Next

end function

I think the optimized version is easier to read.

Is it faster?

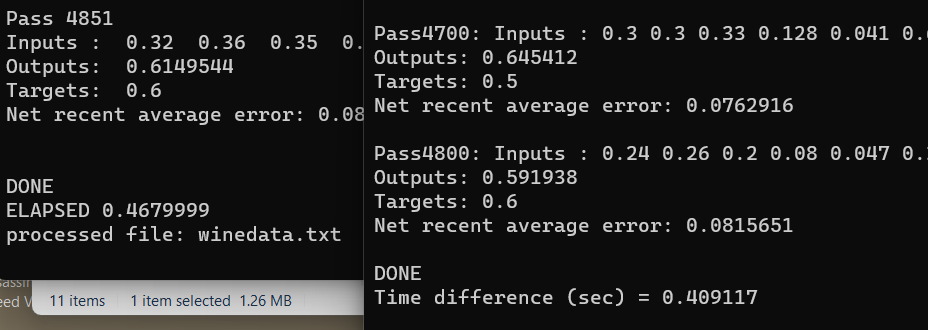

Console app, core i9 Intel, macOS Ventura:

1.10 seconds Original version

0.63 seconds modified version

Nearly 2x as fast!

Console app, M1 macbook Air, Sonoma

0.37 seconds modified version
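For readers comparing against the C++ side, the same "look it up once, then reuse it" idea can be sketched in C++ with a reference. This is only an illustration and is not taken from the project's main.cpp: the `Connection` and `Neuron` structs and the free function signature are assumptions modeled on the names in the Xojo code above.

```cpp
#include <cassert>
#include <cstddef>
#include <vector>

// Hypothetical types modeled on the names used in the Xojo code (assumption).
struct Connection {
    double weight = 0.0;
    double deltaWeight = 0.0;
};

struct Neuron {
    double outputVal = 0.0;
    std::vector<Connection> outputWeights;
};

// Updates the weights feeding the neuron at index myIndex from prevLayer.
// eta is the learning rate and alpha the momentum, as in the thread's code.
void updateInputWeights(std::vector<Neuron>& prevLayer, std::size_t myIndex,
                        double gradient, double eta, double alpha) {
    for (Neuron& neuron : prevLayer) {
        assert(myIndex < neuron.outputWeights.size());
        // Look the connection up once and reuse the reference,
        // instead of re-indexing the arrays for every read and write.
        Connection& conn = neuron.outputWeights[myIndex];
        const double oldDeltaWeight = conn.deltaWeight;
        const double newDeltaWeight =
            eta * neuron.outputVal * gradient + alpha * oldDeltaWeight;
        conn.deltaWeight = newDeltaWeight;
        conn.weight += newDeltaWeight;
    }
}
```

In C++ the compiler can often hoist such lookups on its own, so the gain there may be small; in the Xojo timings above, the cached version was the one that ran in 0.63 seconds instead of 1.10.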

Another improvement: change the code in TrainingData.Constructor to the following:

// look for data file using a relative path to the macOS executable:

#if DebugBuild

m_trainingDataFile = m_trainingDataFile.Open(App.ExecutableFile.parent.parent.child(filename))

#else

m_trainingDataFile = m_trainingDataFile.Open(App.ExecutableFile.parent.parent.parent.parent.child(filename))

#endif

and the project will run out of the box without needing you to copy the data file to the Desktop and give permissions.
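The same trick (resolving the data file relative to the executable rather than the Desktop) could be sketched for the C++ version roughly as follows. This assumes C++17 for `std::filesystem`; `resolveDataFile` and its parameters are hypothetical names for illustration, not part of the project.

```cpp
#include <cassert>
#include <filesystem>
#include <string>

namespace fs = std::filesystem;

// Hypothetical helper (assumption): walk `levelsUp` directories up from the
// executable's path and return the path of `filename` inside that folder.
fs::path resolveDataFile(const fs::path& executable, int levelsUp,
                         const std::string& filename) {
    assert(levelsUp >= 0);
    fs::path dir = executable;
    for (int i = 0; i < levelsUp; ++i) {
        dir = dir.parent_path();  // one step up per iteration
    }
    return dir / filename;
}
```

With two levels for a debug build and four for a release build, this mirrors the `parent.parent` and `parent.parent.parent.parent` chains in the Xojo snippet; the right number of levels depends on where your build places the executable.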

Which compiler optimization do you use?

The project came set to use Aggressive compilation and ARM build. I changed it to Universal for my testing, but left Aggressive set.

Xojo 2023 R4, macOS Ventura 13.6.3, tested on two machines: 15 Inch MacBook Pro (2019) 2.4GHz Core i9, and M1 MacBook Air (2020).

I have updated the GitHub repository with the pragmas, the precomputed loop boundaries, and the cached objects in updateInputWeights. Left and right are Xojo and C++ with these changes on my Gigabyte laptop with an Intel i5. Very impressive.

on my macbook pro arm-64 m1 - 0.4021139

very impressive…

This is even faster:

Public Sub updateInputWeights(prevLayer as Layer)

#Pragma BackgroundTasks False

#Pragma BoundsChecking False

#Pragma StackOverflowChecking False

#Pragma NilObjectChecking False

Var oldDeltaWeight As Double

Var newDeltaWeight As Double

Var neurons() As ai.Neuron = prevLayer.Neurons

Var outputWeights As connection

Var nLast As Integer = neurons.LastIndex

For n As Integer = 0 To nLast

outputWeights = neurons(n).m_outputWeights(m_myIndex)

oldDeltaWeight = outputWeights.deltaWeight

newDeltaWeight = (eta * neurons(n).getOutputVal * m_gradient) + (alpha * oldDeltaWeight)

outputWeights.deltaWeight = newDeltaWeight

outputWeights.weight = outputWeights.weight + newDeltaWeight

Next

End Sub

… and taking the loop boundary calculation out of the loop makes the console app run pretty on par with the C results:

DONE

ELAPSED 0.3934708

processed file: winedata.txt

Public Sub backProp(targetVals() as double)

#Pragma BackgroundTasks False

#Pragma BoundsChecking False

#Pragma StackOverflowChecking False

#Pragma NilObjectChecking False

//calculate overall net error

var outputLayer As layer = m_layers(m_layers.LastIndex)

var limit As Integer = m_layers(m_layers.LastIndex).Neurons.Count - 2

var limit2 As Integer = (m_layers(m_layers.LastIndex).Neurons.Count - 1)

var limit3 As Integer

m_error = 0.0

For n As Integer = 0 To limit //-2?

var delta as double = targetVals(n) - m_layers(m_layers.LastIndex).Neurons(n).getOutputVal

m_error = m_error + delta * delta

Next

m_error = m_error / limit2

m_error = Sqrt(m_error)

//implement recent average measurement

m_recentAverageError = (m_recentAverageError * m_recentAverageSmoothingFactor + m_error) / (m_recentAverageSmoothingFactor + 1.0)

limit = outputLayer.Neurons.Count - 2

// Calculate output layer gradient

For n As Integer = 0 To limit

outputLayer.Neurons(n).calcOutputGradients(targetVals(n))

Next

limit = m_layers.Count - 2

// Calculate gradients on hidden layer

For layerNum As Integer = limit DownTo 1

limit2 = m_layers(layerNum).Neurons.Count - 2

limit3 = layerNum + 1

For n As Integer = 0 To limit2

m_layers(layerNum).Neurons(n).calcHiddenGradients(m_layers(limit3))

next

next

limit = m_layers.Count - 1

// For all layers from outputs to first hidden layer, update connection weights

For layerNum As Integer = limit DownTo 1 //changed from -2

limit2 = m_layers(layerNum).Neurons.Count - 2

limit3 = layerNum - 1

For n As Integer = 0 To limit2

m_layers(layerNum).Neurons(n).updateInputWeights(m_layers(limit3))

next

next

End Sub
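The error bookkeeping at the top of backProp boils down to two formulas: a root-mean-square error over the output neurons, and a smoothed running average of that error. A minimal C++ sketch, assuming the last neuron in each layer is a bias neuron that is excluded from the error (which the `Count - 2` loop limits suggest); the function names here are hypothetical:

```cpp
#include <cassert>
#include <cmath>
#include <cstddef>
#include <vector>

// RMS error over the (non-bias) output neurons:
// sqrt( sum((target - output)^2) / n )
double rmsError(const std::vector<double>& targets,
                const std::vector<double>& outputs) {
    assert(!targets.empty() && targets.size() == outputs.size());
    double sum = 0.0;
    for (std::size_t n = 0; n < targets.size(); ++n) {
        const double delta = targets[n] - outputs[n];
        sum += delta * delta;
    }
    return std::sqrt(sum / static_cast<double>(targets.size()));
}

// Running average of the error, weighted by the smoothing factor,
// matching the m_recentAverageError update in backProp.
double updateRecentAverage(double recentAverage, double error,
                           double smoothingFactor) {
    return (recentAverage * smoothingFactor + error) / (smoothingFactor + 1.0);
}
```

For example, targets {2, 0} against outputs {0, 2} give a squared-error sum of 8, a mean of 4, and an RMS error of 2.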

I get the same result.

I hope Xojo can speed up the parts of the framework that are called often. Then we may be able to enjoy the same speed as C/C++ (or at least come really close), which could attract even more people to Xojo!

ELAPSED 0.3612618

on m2 max macbook pro console mode

You need threads for that; you're never going to do any kind of serious task without real threads. It's simply where hardware has gone: big cores, small cores, and lots of cores. So for serious work, even a slower language would be fine as long as it has real threading.